Hello fellow keepers of numbers,

We’re now in the season of giving. And in the spirit of giving, the New York Times (NYT) has given OpenAI quite the legal headache: OpenAI is expected to turn over 20 million de-identified chat conversations as part of the NYT court case. Also in the spirit of giving, OpenAI has given us a warmer, friendlier AI model than the cold and aloof GPT-5. Lastly, Scribe launched Scribe Optimize to help its customers identify process optimization opportunities and estimate ROI.

THE LATEST

OpenAI launches GPT-5.1

Source: Google Gemini Nano Banana

OpenAI announced and rolled out GPT-5.1, featuring two variants: GPT-5.1 Instant for everyday conversations and GPT-5.1 Thinking for complex reasoning. The update introduces improved instruction following, reduced technical jargon, and new personality presets including Professional, Friendly, Candid, Quirky, Efficient, Nerdy, and Cynical.

In a separate announcement for developers, OpenAI detailed API improvements focused on automation. GPT-5.1 introduces a new "none" reasoning setting that responds with the speed of a non-reasoning model while keeping high intelligence and improving tool calling. OpenAI also overhauled how the model allocates thinking tokens: on straightforward tasks, GPT-5.1 uses fewer tokens for faster responses and lower costs, while staying persistent on difficult problems.

The rollout begins with paid subscribers, with API access available this week. Pricing remains unchanged at $1.25 per million input tokens and $10 per million output tokens. Legacy GPT-5 will remain available for three months.
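As a rough illustration of what that pricing means in practice, here's a small sketch that estimates monthly API spend from token counts. The request volume and tokens per request are hypothetical numbers, not OpenAI figures, so swap in your own usage. Reasoning (thinking) tokens are billed as output tokens, which is why using fewer thinking tokens on simple tasks matters for cost.

```python
# Hypothetical sketch: estimate GPT-5.1 API spend from token counts using
# the list prices quoted in this article (USD per 1 million tokens).
INPUT_PRICE_PER_M = 1.25
OUTPUT_PRICE_PER_M = 10.00  # reasoning tokens are billed as output tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token totals."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical month: 2,000 requests averaging 3,000 input and 800 output tokens.
    requests = 2_000
    monthly = estimate_cost(requests * 3_000, requests * 800)
    print(f"Estimated monthly cost: ${monthly:,.2f}")
```

At these assumed volumes the output side ($16.00) is more than twice the input side ($7.50), for a total of $23.50, even though far fewer output tokens are used.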

Why it’s important for us:

This was one of the quieter updates from OpenAI in recent memory. OpenAI received a lot of backlash when GPT-5 rolled out because many users had grown used to the communication style of GPT-4o and other variants. This update looks like an effort to address those complaints and offer a communication style closer to GPT-4o’s.

For those who were familiar with both GPT-4o and GPT-5, you probably noticed that GPT-5 was more verbose, technical, and “cold.” If you care about your AI model’s communication style, this could be a meaningful update for you.

In my early testing with GPT-5.1, it does seem to be better at communicating in natural language with slightly more concise responses. However, I haven’t noticed any sort of performance difference.

One of my biggest complaints with ChatGPT right now (especially compared to Claude Sonnet 4.5) is that it often writes extremely long, verbose responses to questions or requests that should be straightforward. I feel like I’m reading a ChatGPT mini-novel while Claude responds in one or two short paragraphs to the same question.

You can “train” your AI model on your preferred communication style through memories, personalization, and prompts. However, I find I prefer the out-of-the-box Claude communication and performance over ChatGPT at the moment.

Scribe announces Optimize to map workflows and improve processes

Scribe announced Scribe Optimize, a new platform that automatically maps workflows across an organization to identify and rank automation opportunities by ROI potential. Optimize continuously monitors real work patterns across approved apps, then provides prioritized recommendations with prebuilt business cases and implementation plans.

The announcement comes alongside a $75 million Series C funding round at a $1.3 billion valuation. The platform builds on Scribe Capture, which has documented over 10 million workflows across 40,000 applications for 5 million users, including teams at 94% of Fortune 500 companies. Users of Scribe Capture report saving 35 hours per person per month.

Optimize captures workflows only for designated users who give consent and only within business apps that admins approve. The system automatically detects where time is spent, what slows teams down, and where opportunities exist without requiring employees to manually start or stop recording.

CEO Jennifer Smith noted in the announcement that 95% of AI initiatives fail because "AI can't optimize what it can't see," comparing it to self-driving cars that spent years mapping roads before automating.

Why it’s important for us:

Scribe is a great tool for anyone who wants to create SOPs across their firm. This was a natural next step for Scribe, and I really like the vision of the company.

I often see firms try to solve problems by buying new software. 95% of the time, the problem could be fixed with better internal processes, an automation, or an integration they haven’t considered. The other 5% of the time, new software may be the answer. But software implementations can be complex. Firms need well-documented processes and preparation before implementation, and they need to train their teams well during and after implementation.

Some of the main benefits of SOPs:

1) Complete tasks with greater accuracy.

2) Complete tasks more quickly and on time.

3) Quickly train new team members and get them up to speed on actual tasks within the firm.

4) Better documentation for software implementations.

and my personal favorites…

5) Identify process improvements by highlighting inefficiencies, duplicate data entry, unnecessary software, and more.

6) Use as context to teach AI how to help you in your day-to-day workflows.

Scribe is now targeting #5 with Optimize. I suspect not every suggestion it makes will be perfect, and the estimated ROI may only be a ballpark figure. But Scribe has compiled an extremely large amount of data from major companies across most industries, so this new offering should keep improving over time. I’d imagine they’ll also tailor it to specific industries to make more targeted suggestions.
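To make "rank by ROI" concrete, here's a minimal sketch of ranking automation opportunities by a simple first-year ROI. This is not Scribe's method; the opportunities, hours saved, costs, and $75 hourly rate are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    hours_saved_per_month: float
    implementation_cost: float  # one-time cost in USD
    monthly_cost: float  # ongoing subscription/maintenance in USD


def first_year_roi(opp: Opportunity, hourly_rate: float) -> float:
    """First-year ROI = (value of hours saved - total cost) / total cost."""
    annual_benefit = opp.hours_saved_per_month * 12 * hourly_rate
    total_cost = opp.implementation_cost + opp.monthly_cost * 12
    return (annual_benefit - total_cost) / total_cost


def rank_by_roi(opps: list[Opportunity], hourly_rate: float) -> list[tuple[str, float]]:
    """Return (name, ROI) pairs sorted from highest to lowest ROI."""
    scored = [(o.name, first_year_roi(o, hourly_rate)) for o in opps]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical opportunities and a hypothetical $75/hour blended rate.
    candidates = [
        Opportunity("New practice management system", 30, 20_000, 400),
        Opportunity("Bank-feed categorization automation", 20, 3_000, 50),
        Opportunity("Client onboarding integration", 8, 1_500, 0),
    ]
    for name, roi in rank_by_roi(candidates, hourly_rate=75):
        print(f"{name}: {roi:.0%}")
```

With these made-up numbers, the bank-feed automation (400%) and onboarding integration (380%) rank far ahead of the new system (about 9%), even though the new system saves the most hours.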

OpenAI ordered to turn over ChatGPT conversations

Source: Google Gemini Nano Banana

The New York Times sued OpenAI in December 2023, claiming the company used millions of copyrighted articles without permission to train its AI models. US Magistrate Judge Ona Wang ordered OpenAI on November 7 to produce 20 million anonymized ChatGPT conversations from December 2022 through November 2024. The order affects ChatGPT Free, Plus, Pro, and Team users, along with API customers without Zero Data Retention agreements.

OpenAI published a response calling the demand an invasion of user privacy. The company argued it would force them to "turn over tens of millions of highly personal conversations from people who have no connection to the Times' baseless lawsuit." OpenAI says affected chats will be de-identified to scrub personal information before being turned over to the Times' legal team, and the data is currently stored separately in a secure system accessible only to a small legal and security team.

OpenAI filed a motion on November 12 asking the court to reconsider, arguing the order sets a dangerous precedent where "anyone who files a lawsuit against an AI company can demand production of tens of millions of conversations without first narrowing for relevance."

Why it’s important for us:

This is an important case to follow for everyone using AI. We’re setting new legal precedents in nearly all of these cases against AI providers, and legal battles like this one will keep coming.

This particular case is noteworthy because the judge ruled that OpenAI must turn over user conversations from all ChatGPT plans except Enterprise, and it looks like the ruling is going to stand. OpenAI is currently expected to turn over the data by November 14th. However, OpenAI says it is scrubbing PII and sensitive data from the conversations before handing them over.

Regardless, this highlights why many accounting firms are nervous about sharing sensitive data with AI models. Despite SOC 2 Type II certifications and other data privacy controls, a legal precedent may be forming that allows conversations to be retained and surfaced in a court of law.

Firms should continue to assess the risk for themselves and decide what kind of data they’re comfortable sharing with AI models. Ultimately, the data at hand is currently being shared only with the NYT legal team and is being de-identified to exclude PII. This also doesn’t change the fact that the major AI providers don’t train on conversations or data shared on their business plans or through their APIs by default.

This case will be one to monitor. But personally, I don’t think it changes anything for us right now. At the moment, this is more of a data retention issue than a data privacy issue, since PII and private data are being scrubbed before handover. It’s also clear that OpenAI and the judge are aware that data privacy is of major importance in this case.

PUT IT TO WORK

Tip or Trick of the Week

If you’re not currently using a meeting recording tool, I highly recommend it. Recording meetings is a high-value quick win for firms.

Right now, my two most recommended meeting recorders are Fireflies and Fathom, but there are also new accounting-specific meeting recorders becoming available.

Fireflies and Fathom both have APIs that let us build automations like the one below to store our meeting notes and action items.

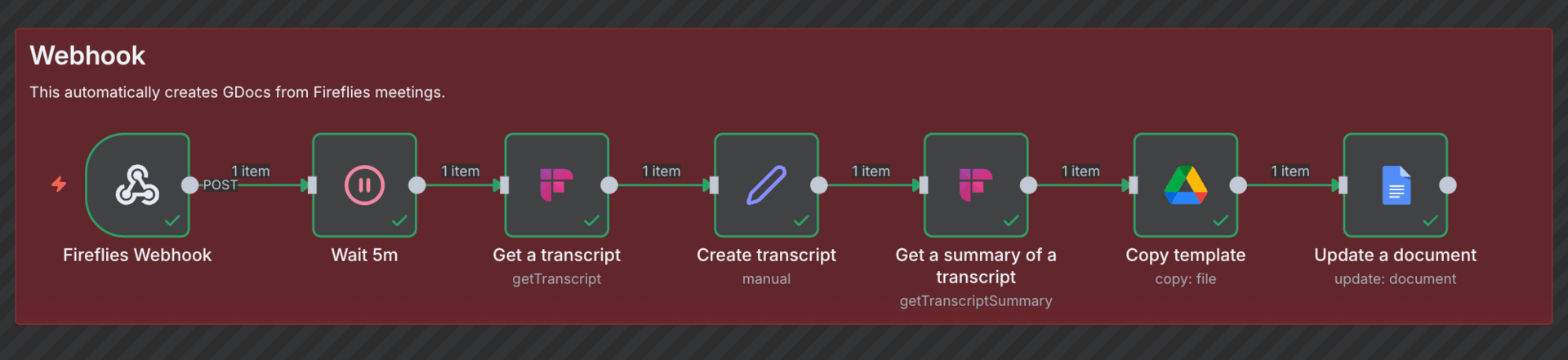

n8n automation that stores the Fireflies summary and transcript in Google Drive.

The above automation in n8n triggers when a Fireflies meeting recording ends. It waits 5 minutes to give Fireflies time to generate the AI meeting summary and action items. It then pulls the full meeting transcript, along with the AI-generated summary and action items, from Fireflies. Finally, the workflow creates a Google Doc and saves the information in my specified meetings folder.
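For anyone who prefers scripting over n8n, here's a minimal Python sketch of the same four steps (wait, fetch, format, save). It does not call the real Fireflies or Google Drive APIs: `fetch_meeting`, `save_doc`, and the payload fields (`title`, `summary`, `action_items`, `sentences`) are hypothetical stand-ins, not the actual Fireflies schema.

```python
import time
from typing import Callable

WAIT_SECONDS = 5 * 60  # mirrors the 5-minute wait step in the n8n workflow


def build_meeting_doc(meeting: dict) -> str:
    """Turn an (assumed) meeting payload into plain text for a Google Doc."""
    lines = [meeting["title"], "", "Summary", meeting["summary"], "", "Action items"]
    lines += [f"- {item}" for item in meeting["action_items"]]
    lines += ["", "Transcript"]
    lines += [f"{s['speaker']}: {s['text']}" for s in meeting["sentences"]]
    return "\n".join(lines)


def handle_meeting_ended(
    meeting_id: str,
    fetch_meeting: Callable[[str], dict],  # hypothetical wrapper around the Fireflies API
    save_doc: Callable[[str, str], None],  # hypothetical: creates a Doc in the meetings folder
    wait_seconds: int = WAIT_SECONDS,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    sleep(wait_seconds)  # give Fireflies time to finish the AI summary
    meeting = fetch_meeting(meeting_id)  # transcript, summary, and action items
    doc_text = build_meeting_doc(meeting)
    save_doc(meeting["title"], doc_text)  # one Doc per meeting, named after its title
    return doc_text
```

Passing the two external calls in as functions keeps the formatting logic testable without network access, and the same handler works unchanged if `save_doc` writes to SharePoint instead of Google Drive.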

While Fireflies has AI features that let you chat with your meetings, I find that I prefer to have the data in a Google Drive where I can connect it to ChatGPT or Claude, which is where I’m often working on tasks that need the meeting context.

This same automation can easily save to SharePoint instead of Google Drive.

You can also do a few other cool things that aren’t represented in my n8n workflow above:

Map the meeting transcripts into specific client folders.

Extract the action items and create tasks in your preferred task management tool (e.g., Notion, Asana, your practice management system, etc.).

Extract the AI-generated meeting summary and send it to your team via email, Slack message, Teams message, etc.
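Here's a minimal sketch of the first two ideas: picking a client folder from participants' email domains and turning a block of action items into task records. The domain-to-folder mapping, the one-item-per-line action-item format, and the `Task` fields are assumptions for illustration; in a real workflow the tasks would then be sent to Notion, Asana, or your practice management system through that tool's own API.

```python
from dataclasses import dataclass

# Hypothetical mapping of client email domains to client folders.
CLIENT_FOLDERS = {
    "acmebakery.com": "Clients/Acme Bakery",
    "northwind.io": "Clients/Northwind",
}
DEFAULT_FOLDER = "Meetings/Unsorted"


def pick_client_folder(participant_emails: list[str]) -> str:
    """Return the folder for the first participant whose domain is a known client."""
    for email in participant_emails:
        domain = email.rsplit("@", 1)[-1].lower()
        if domain in CLIENT_FOLDERS:
            return CLIENT_FOLDERS[domain]
    return DEFAULT_FOLDER


@dataclass
class Task:
    title: str
    source_meeting: str


def action_items_to_tasks(action_items_text: str, meeting_title: str) -> list[Task]:
    """Split an (assumed) one-item-per-line action list into tasks, dropping bullets."""
    tasks = []
    for line in action_items_text.splitlines():
        item = line.strip().lstrip("-*•").strip()
        if item:  # skip blank lines
            tasks.append(Task(title=item, source_meeting=meeting_title))
    return tasks
```

Falling back to an "Unsorted" folder means internal meetings or new prospects still get saved somewhere you can review later.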

WEEKLY RANDOM

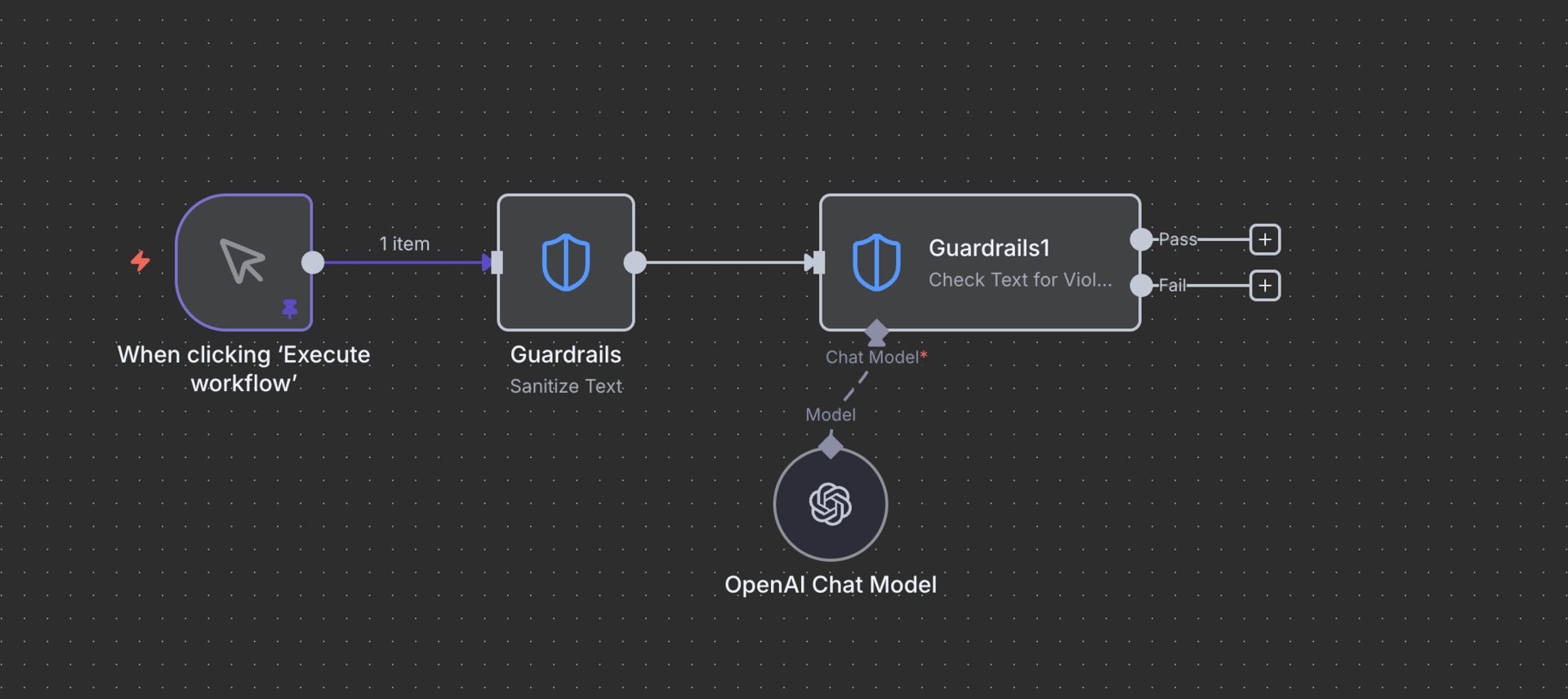

When OpenAI released Agent Builder about a month ago, one of the interesting nodes they included was called Guardrails. Their Guardrails node is a protection layer that helps identify and scrub PII, detect jailbreaks (i.e., when a prompt is trying to bypass safety measures for a model), and detect hallucinations from the AI model.

Turns out this must have been a good idea because n8n just stole it. n8n announced a new node called Guardrails that’s available to anyone who updates to the latest version (1.119.1 or later).

There are two operations within the n8n Guardrails node: (1) Check Text for Violations and (2) Sanitize Text.

Check Text for Violations: This allows you to pass or fail the information sent through the node based on custom filters for keywords, PII, NSFW language, secret keys (like API keys), topical alignment (i.e., staying on topic), and custom expressions. If the Guardrails node finds any disallowed information, the node sends the data through a Fail branch where you can perform custom actions or end the execution.

Sanitize Text: This allows you to identify and scrub information such as PII, secret keys, URLs, and custom expressions. It replaces the identified information with placeholders.
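To show the difference between the two operations, here's a simplified sketch in Python. It is not n8n's implementation: the regex detectors, the `password` keyword filter, and the `[EMAIL]`-style placeholders are hypothetical and far less thorough than real PII detection.

```python
import re

# Simplified, hypothetical detectors; real guardrails use far more robust checks.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9_-]{16,}\b"),
}
BLOCKED_KEYWORDS = {"password"}  # hypothetical custom keyword filter


def check_text(text: str) -> tuple[bool, list[str]]:
    """Return (passed, violations); a failed check would route to a Fail branch."""
    violations = [name for name, rx in PATTERNS.items() if rx.search(text)]
    lowered = text.lower()
    violations += [f"KEYWORD:{kw}" for kw in sorted(BLOCKED_KEYWORDS) if kw in lowered]
    return (not violations, violations)


def sanitize_text(text: str) -> str:
    """Replace each detected value with a placeholder such as [EMAIL]."""
    for name, rx in PATTERNS.items():
        text = rx.sub(f"[{name}]", text)
    return text
```

`check_text` mirrors the pass/fail routing of Check Text for Violations, while `sanitize_text` mirrors Sanitize Text by letting the data continue with placeholders in place of the sensitive values.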

This is another important update for those worried about the information they pass through certain software, APIs, or AI models. While most tools you might use in your n8n workflow are secure, this adds another layer of protection for additional peace of mind.

Until next week, keep protecting those numbers.

Preston