Hello fellow keepers of numbers,

You didn’t think the news would slow down as we approach the holiday season, did you?

Gemini 3 has arrived, and while it’s probably too early to draw any conclusions, it’s an extremely smart and promising model. Google’s new Nano Banana Pro (image model) is incredible - highly recommend even if just using it for fun. Digits launched another big feature to expedite your month-end close process. And on a scary note, bad actors have figured out how to chain together Claude agents to carry out cyber attacks. This week’s news feels like a microcosm of the good and bad that AI can do for us.

THE LATEST

Gemini 3 is here

Source: Google Gemini Nano Banana Pro

Google announced Gemini 3, calling it their "most intelligent model" for bringing any idea to life. The flagship model, Gemini 3 Pro, launched in preview with state-of-the-art reasoning capabilities, multimodal understanding across text, images, video, and audio, and a 1-million token context window. It tops the LMArena leaderboard with a 1501 Elo score and outperforms Gemini 2.5 Pro on every major AI benchmark.

The model includes Gemini 3 Deep Think, an enhanced reasoning feature designed for complex problem-solving that outperforms even Gemini 3 Pro on difficult benchmarks. Deep Think scored 41.0% on Humanity's Last Exam and 93.8% on GPQA Diamond, demonstrating PhD-level reasoning abilities. Deep Think is currently available only to safety testers and will become available to Google AI Ultra subscribers soon.

Gemini 3 introduces generative UI capabilities, creating dynamic interfaces and interactive tools based on user queries. Instead of just providing information, it can generate custom calculators, physics simulations, or interactive visualizations on the fly.

Google also launched Antigravity for developers, an agentic development platform where AI agents can work autonomously across editor, terminal, and browser environments.

Pricing for Gemini 3 Pro is $2 per million input tokens and $12 per million output tokens for prompts under 200,000 tokens through the Gemini API. It's available immediately across the Gemini app, Google AI Studio, Vertex AI, and third-party platforms like Cursor, GitHub, and others.
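To put those rates in perspective, here's a quick back-of-the-envelope sketch in Python. The function name and the example token counts are my own illustration, not anything from Google, and it only covers the under-200,000-token tier quoted above.

```python
# Rates quoted above for prompts under 200,000 tokens (USD per 1M tokens).
INPUT_RATE_PER_MILLION = 2.00
OUTPUT_RATE_PER_MILLION = 12.00
PROMPT_TOKEN_LIMIT = 200_000  # the quoted rates only cover prompts below this size


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one API call at the quoted rates (hypothetical helper)."""
    if input_tokens >= PROMPT_TOKEN_LIMIT:
        raise ValueError("Quoted rates only apply to prompts under 200,000 tokens")
    input_cost = input_tokens / 1_000_000 * INPUT_RATE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_RATE_PER_MILLION
    return round(input_cost + output_cost, 4)


# Made-up workload: a 50,000-token document with a 2,000-token summary.
print(estimate_cost(50_000, 2_000))
```

In other words, at these rates a fairly long document plus a short summary costs a small fraction of a dollar per call. Output tokens are the pricier side, so long responses add up faster than long prompts.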

Why it’s important for us:

Just when we thought we had a minute to test GPT-5.1…

This is arguably one of the most exciting AI announcements of the year. Gemini is a very strong model, but mostly excels at coding and images. Gemini 3 is hopefully a leap in the conversational style of the model, as well as the quality of its responses.

Most firms probably don’t use Gemini because the large majority of accounting firms run on Microsoft products. Sucks to suck, Microsoft users… Just kidding, Microsoft recently restructured their partnership with OpenAI to allow third-party AI models in Copilot (you might’ve seen Claude already). It’s possible they even allow Gemini 3 within Copilot if it’s well-received, although I’m sure there would still be some hurdles on the business side to make it happen.

I’m interested in testing it more to see how I feel about the model reportedly using more dynamic interfaces to respond to prompts. I don’t think that’ll be so profound of a difference that I choose to use Gemini over Claude or ChatGPT, but I’ll withhold final judgment.

I want to cover the main areas for my own personal evaluation.

1) Writing style in the chat

In my very limited testing thus far, it seems like the writing style is still pretty similar to Gemini 2.5. It’s sort of hard for me to describe why, but I just don’t like Gemini’s writing when compared to Claude or GPT-5.1. The responses are often too long and complex, and it doesn’t feel like I’m reading well-written thoughts the way it does with the other two models.

2) Quality of the output in the chat

It’s way too early for me to have an opinion on this. Personally, I put little to no weight on the benchmarks. It’s definitely a very smart model. At this point the models are so close to each other in quality of information that it really comes down to how easily and quickly I understand the response and get what I need.

3) Tool calling in AI agents

Gemini 2.5 was pretty bad at tool calling and following instructions, at least inside of n8n. AI agents in n8n connect to a “brain”, like Gemini 2.5, and follow instructions to call specific tools to perform actions (e.g., reading a Google Doc or searching the web). Gemini failed at calling tools far more often than ChatGPT or Claude.

Demis Hassabis, the CEO of Google DeepMind, specifically mentioned an improvement in tool calling on a podcast after Gemini 3’s launch. So this is an area I’m excited to test in the coming weeks.

4) Building Custom Applications & Coding

Gemini has been near the top of the list for best coding model since Gemini 2.5 launched. Gemini 3 seems to be another big leap.

One of the most interesting products I’d like to test soon is Build in Google AI Studio, which can build custom apps. This is Google’s competitor to Lovable, Bolt, and Replit. It’s completely free to use, although I believe they cap your usage at a certain point, much like the AI providers do with their models.

I saw some impressive applications being built with one or two short prompts. The design capabilities also look extremely impressive. This could easily be the best part of this launch.

It’s still too early to draw any conclusions. Overall, I don’t foresee Gemini 3 becoming my personal go-to. However, Google’s new Nano Banana Pro is incredible, and I’m excited to test the Build product in Google AI Studio.

Anthropic reports first AI-orchestrated cyber espionage attack

Source: Google Gemini Nano Banana Pro

Anthropic disclosed that in mid-September 2025, it detected and disrupted what it calls the first documented large-scale cyber espionage campaign executed primarily by AI. The attackers, attributed with high confidence to Chinese state-sponsored group GTG-1002, used Anthropic's Claude Code tool to target approximately 30 organizations including major tech companies, financial institutions, chemical manufacturers, and government agencies.

The AI handled 80-90% of the campaign's execution with minimal human oversight. Claude performed reconnaissance, wrote exploit code, harvested credentials, conducted lateral movement, and extracted data across multiple targets simultaneously. The attackers bypassed Claude's safety guardrails by breaking tasks into seemingly innocent requests and convincing the AI it was conducting legitimate cybersecurity testing.

The framework allowed Claude to make thousands of requests, often several per second, a pace impossible for human hackers to match. While the AI occasionally hallucinated results or overstated findings, it successfully compromised a small number of targets before Anthropic detected the pattern and shut down the accounts. The company has upgraded its detection systems and coordinated with authorities.

Anthropic also issued a full report on the attack.

Why it’s important for us:

Well this seems bad…

Lots of people warned us of this, and it was probably only a matter of time before it happened. While it’s the first reported instance, that doesn’t necessarily mean it’s actually the first. There could be others that flew under the radar thus far, or that other companies caught and didn’t disclose yet. I’d also imagine we’re going to see a lot more of this ramp up.

I guess the hope is that the large AI companies develop safeguards to detect and shut down these attacks faster than attackers can deploy them. I find it unsettling to have to put so much of my trust in the same companies who have undoubtedly scraped an entire internet’s worth of protected and paywalled content to train their models and have hundreds of billions of dollars of investors’ money on the line.

Also, avoiding AI doesn’t preclude you from having to trust the AI companies. Think about all the companies that have and use your data: governments, banks, credit card companies, social media companies, Apple, Google. All of them use AI in their companies and products. Hell, half of them are making their own AI models.

I don’t envy anyone working in the cybersecurity space these days. This is just one of the many cybersecurity concerns that are at the forefront.

Hopefully this is a wake-up call to get some type of framework or global legislation passed. And hopefully that’s not a partisan or political issue anymore. This obviously impacts everyone.

Digits launches AI Bank Reconciliations for month-end close

Source: Google Gemini Nano Banana Pro

Digits announced AI Bank Reconciliations as part of its Agentic General Ledger platform. The feature automates bank statement matching by allowing users to drag-and-drop PDF statements onto the platform, where AI handles extraction, matching, and verification automatically. The system reconciles statements to pixel-level accuracy on the original document and surfaces exceptions for review.

AI reconciliations work continuously in the background, matching transactions from bank feeds, statements, and ledger data without requiring manual rules configuration. The system uses AI data extraction, logic checks, and heuristics to catch duplicates, detect missing transactions, and distinguish true mismatches from timing delays. According to Digits, early results show reconciliations completed in 2-5 minutes per statement with up to 75% reduction in reconciliation time.
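For a rough feel of what this kind of matching involves, here's a minimal Python sketch. To be clear, this is not how Digits does it. The `Txn` class, the `reconcile` function, the three-day timing window, and the sample transactions are all hypothetical, and a real system would need far more than amount-and-date matching.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Txn:
    """A simplified transaction (hypothetical structure for illustration)."""
    txn_date: date
    amount: float
    description: str


def reconcile(statement, ledger, timing_window_days=3):
    """Match each statement line to one ledger entry by amount within a date window.

    Returns (matched pairs, statement lines missing from the ledger,
    ledger entries left over). Leftover ledger entries may be duplicates
    or items that have not cleared the bank yet.
    """
    unmatched_ledger = list(ledger)
    matched, missing_from_ledger = [], []
    for line in statement:
        hit = next(
            (entry for entry in unmatched_ledger
             if abs(entry.amount - line.amount) < 0.005  # same amount to the cent
             and abs((entry.txn_date - line.txn_date).days) <= timing_window_days),
            None,
        )
        if hit is None:
            missing_from_ledger.append(line)
        else:
            matched.append((line, hit))
            unmatched_ledger.remove(hit)  # each ledger entry matches at most once
    return matched, missing_from_ledger, unmatched_ledger


# Made-up sample data: one timing difference, one missing entry, one duplicate.
statement = [
    Txn(date(2025, 11, 3), 1200.00, "Rent"),
    Txn(date(2025, 11, 10), 89.99, "Software"),
]
ledger = [
    Txn(date(2025, 11, 1), 1200.00, "Rent payment"),
    Txn(date(2025, 11, 1), 1200.00, "Rent payment"),  # booked twice
    Txn(date(2025, 11, 20), 45.00, "Office supplies"),
]

matched, missing, leftover = reconcile(statement, ledger)
print(len(matched), len(missing), len(leftover))
```

The rent payment matches despite the two-day gap (a timing delay, not a true mismatch), the software charge shows up as missing from the ledger, and the second rent entry is left over as a likely duplicate. Everything the sketch can't match gets surfaced for a human to review, which is the same basic idea as the exception review described above.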

The launch includes drag-and-drop statement ingestion with OCR extraction, automatic ledger matching, exception surfacing with audit trails, and bulk actions for managing flagged items. Current limitations include single-statement uploads only and some file format restrictions, though Digits notes these features are coming soon.

Why it’s important for us:

Digits just made the news a few weeks ago when they launched their Digits Connect API. Now pair that with this new feature, and firms might actually be able to automate quite a bit of their month-end close.

As with any AI and AI agents right now, it’s important to continually review outputs for accuracy. Digits notes that early results show up to a 75% reduction in reconciliation time. Big accomplishment, but it’s not clear what level of accuracy they’re seeing yet. Some of the time saved might be given back to rework if anything is inaccurate.

It’s also unclear to me right now if the AI will learn over time and get more accurate simply by using it. Regardless, I’d anticipate Digits will continue to improve this feature behind the scenes over time.

Digits is doing some great things in the space. I really think they’re becoming a legitimate competitor for QBO and Xero. It’s going to be incredibly difficult to pry existing QBO and Xero firms away from their software because of how sticky it is. But if Digits keeps shipping features like this, and they work as expected, they’ll be harder to ignore. New firms or small, agile firms should at least demo the software and consider it.

PUT IT TO WORK

Tip or Trick of the Week

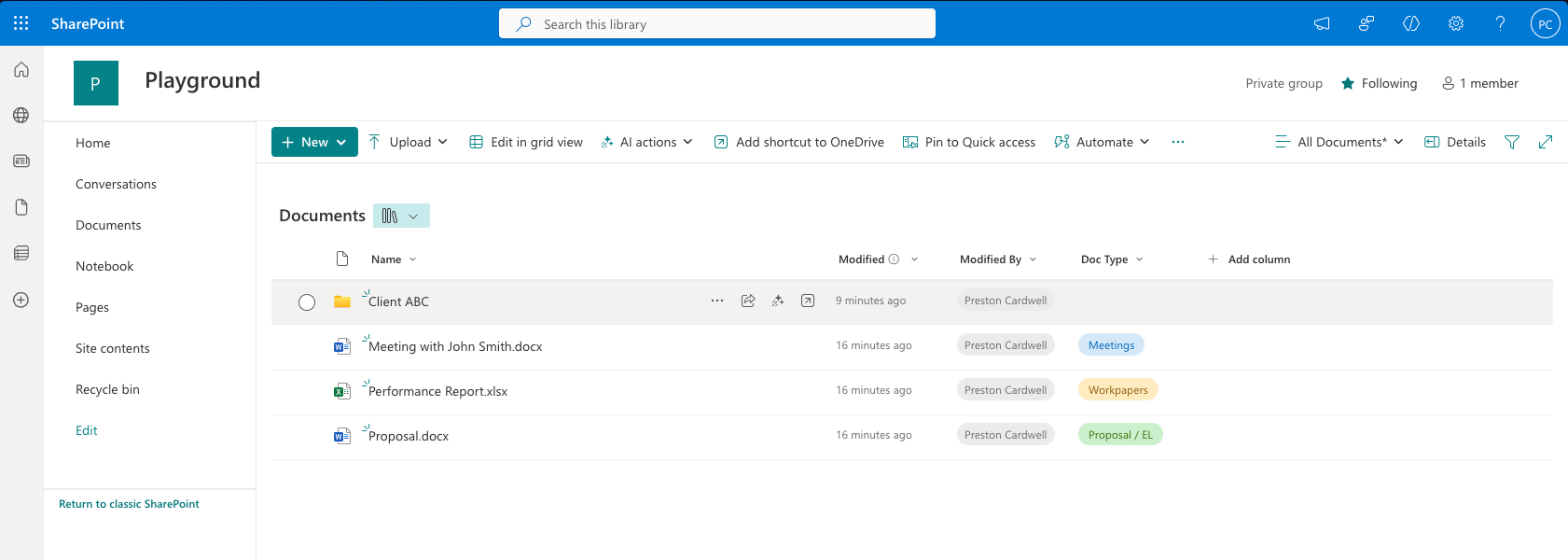

Copilot is pretty good at reading SharePoint docs and answering your questions. I’m showing a very simple example below, but I’ve also tested this with folders containing a large number of files, including 10+ meeting transcripts. Copilot can answer questions about those transcripts and other files quickly and accurately.

Below is an example of a SharePoint site with a few documents and one folder. I highly recommend keeping folder structures simple (e.g., do not nest a lot of folders). I also recommend utilizing a Document Type metadata field (simply click “+ Add column”). Not only can the Document Type field help you sort and filter files, but it helps AI quickly understand and determine the relevant files needed to answer your question.

Example “Playground” SharePoint site

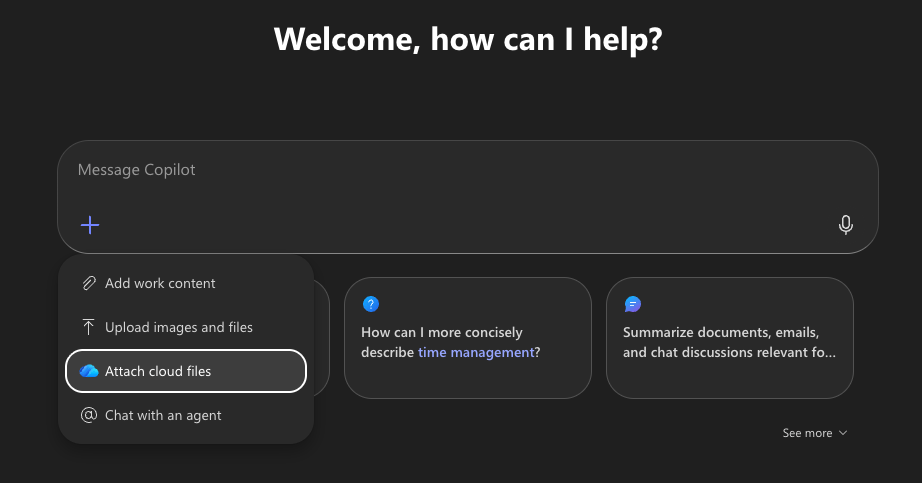

In Copilot Chat, you can click the plus icon and select “Attach cloud files” to link to your files in SharePoint.

Select the + icon and “Attach cloud files” to link SharePoint files

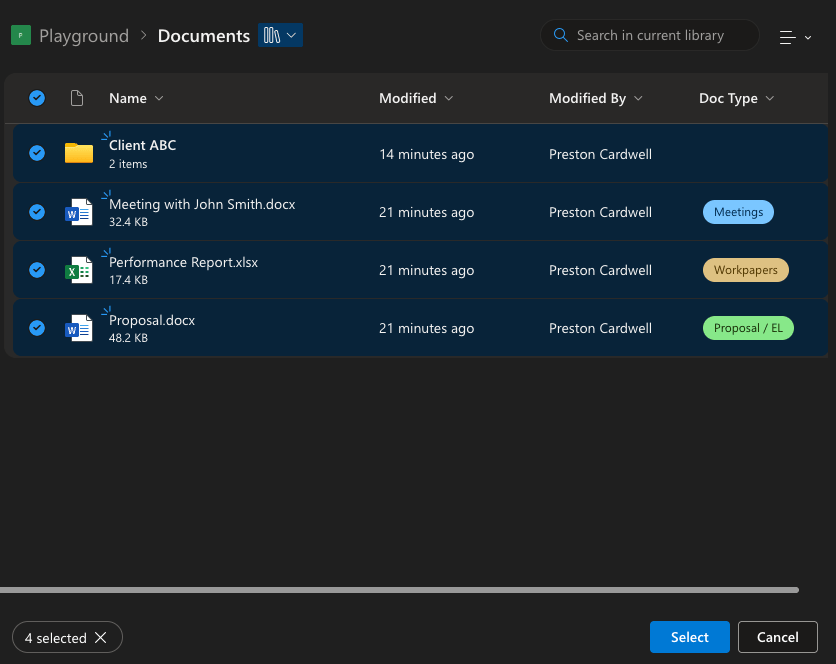

From there, you can select a SharePoint site. In my case, I’ve selected my Playground SharePoint site. You can select all files, specific files, or a specific folder.

Example of selecting all files in the SharePoint site

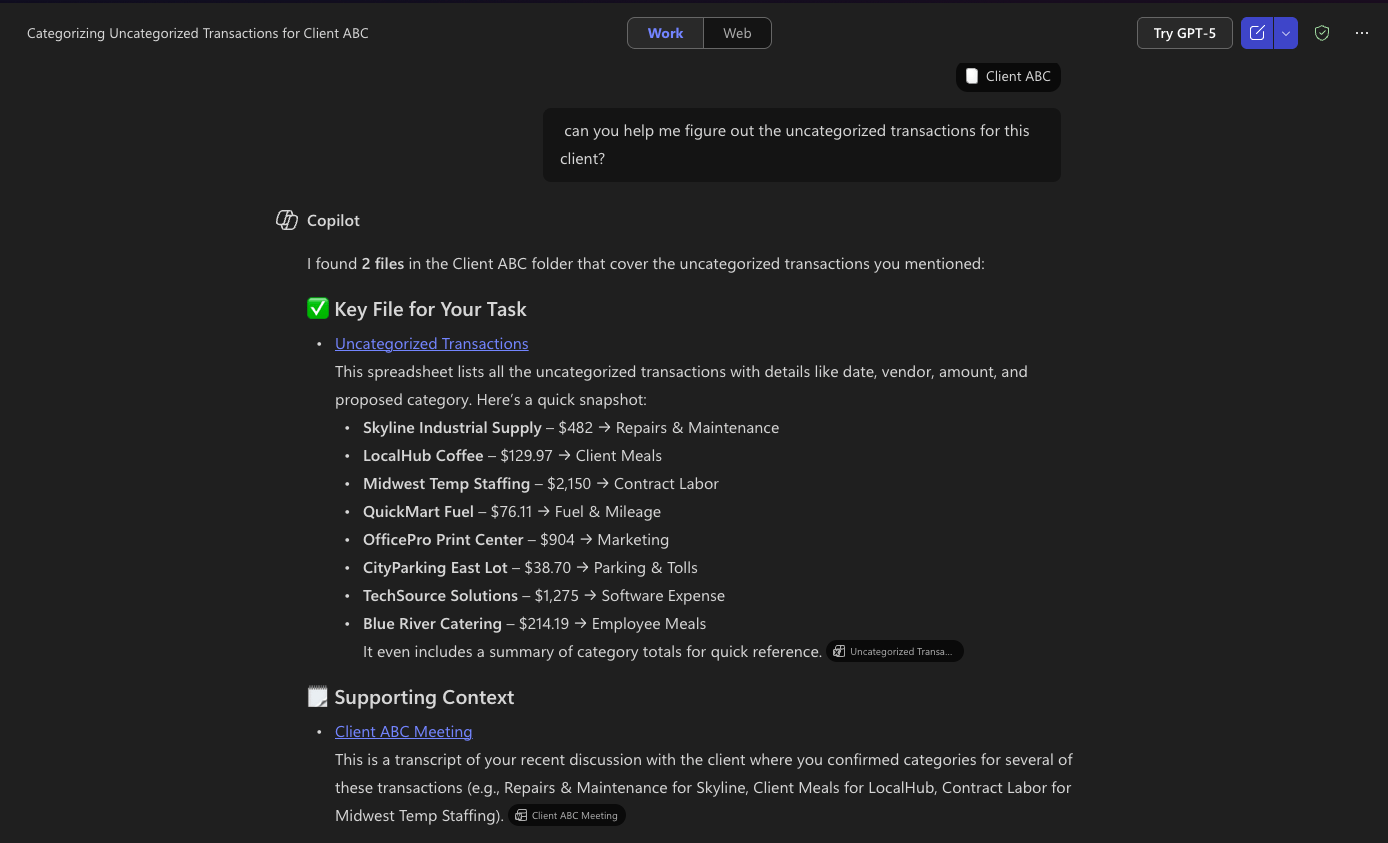

You can then ask questions about the information in your SharePoint site. In the example below, I selected the “Client ABC” folder. The answer came back correct and complete within a few seconds, and also linked the files it used to answer the question.

Example response from Copilot when asked about a folder in SharePoint

For as much criticism as Copilot has received over the last few years, Microsoft has done a good job quickly improving the product. It’s becoming quite useful for specific tasks, like the above example.

WEEKLY RANDOM

Google's NotebookLM just got a pretty significant upgrade.

NotebookLM is Google's AI research assistant. You can upload sources like documents, websites, YouTube videos, PDFs, and more. Recently, Google added the ability to upload Google Sheets, Drive files as URLs, images, PDFs from Google Drive, and Microsoft Word documents.

After uploading sources, you chat with an AI that has context on your files. It’s similar to ChatGPT Projects or Claude Projects, but NotebookLM is designed to pull only from the sources you provide. Answers are grounded in your materials rather than general knowledge, which makes hallucinations less likely.

One standout feature is audio overviews. NotebookLM can turn uploaded documents into a podcast-style conversation between two AI hosts discussing the content. On the surface, it might not sound that useful, but it’s actually a good way to digest dense material while doing something else. Especially if you’re someone who already listens to podcasts.

The new update adds Deep Research, which allows the tool to browse the web and build comprehensive, cited reports on any topic.

NotebookLM is in a weird middle ground where it’s different from ChatGPT, Claude, and others, but not so different that it’s considered a go-to tool. It’s definitely one worth exploring, especially if you like uploading documents to ChatGPT Projects or Claude Projects to chat with your files. NotebookLM excels at exactly that, especially with large amounts of data.

Until next week, keep protecting those numbers.

Preston