Hello fellow keepers of numbers,

Well, a news story about an AI company’s “code red” is never what you want to hear when it involves one of the most dangerous technologies since the atomic bomb. But this code red isn’t about safety at all: it’s about ChatGPT falling behind. Despite that, OpenAI continues to secure enterprise deals with major companies. This time, it’s Accenture. Lastly, people are still finding new Deloitte papers with fake citations. I wonder how many people are using AI to find fake AI citations…

THE LATEST

OpenAI hits “code red” and goes all-in on frontier models

Source: Gemini Nano Banana Pro / The AI Accountant

OpenAI has reportedly declared a company‑wide “code red” to refocus on improving ChatGPT after growing competitive pressure from Google’s Gemini models. An internal memo from CEO Sam Altman elevates ChatGPT quality to the highest urgency level and calls out issues with speed, reliability, and personalization in the day‑to‑day user experience.

To support the shift, OpenAI is reportedly pausing or delaying several projects, including advertising integrations inside ChatGPT, AI agents for shopping and healthcare, and a personal assistant product called Pulse. Altman has encouraged temporary team transfers and instituted daily coordination calls so more people are working directly on fixing and upgrading ChatGPT’s core assistant experience.

The memo also mentions a new “reasoning model” arriving soon, which is an updated AI model designed to handle more complex, multi-step questions and research tasks. Altman has reportedly told staff this model is already scoring ahead of Google’s latest Gemini in internal tests, and the company says ChatGPT still accounts for around 70% of AI assistant usage and roughly 10% of search activity globally.

Why it’s important for us:

Nothing gets by the AI rumor mill, huh? There are obviously a few moles at all the AI companies who instantly leak any interesting internal communications. But hey, we’re not anti-mole here.

This is ultimately good news for ChatGPT users. It’s probably good news for the AI industry as a whole, right? Competition will likely lead to better models. Hopefully that doesn’t come at the expense of AI security.

This is also a pretty good indicator of how good Gemini 3 Pro is. It’s still getting really positive feedback from users. Accountants rarely push the AI models to their limits, so the difference between the top-tier models is largely immaterial for us. I think it still comes down to personal preference. You won’t be missing out on anything game-changing right now regardless of whether you’re using ChatGPT, Gemini, or Claude.

The most important thing for us to pay attention to over the next several months will be how well the models can integrate with spreadsheets and accounting software. Claude still has an early lead in this category, but Microsoft’s Copilot is certainly one to watch, since it should be able to take advantage of Microsoft’s own ecosystem (right?).

OpenAI is likely going to release another updated model in the next few weeks that will be comparable to Gemini 3 Pro. GPT-5.1 has been a pleasant surprise for me, so I’m hopeful GPT-5.2, or whatever they call it, will perform well.

OpenAI partners with Accenture

Source: Gemini Nano Banana Pro / The AI Accountant

OpenAI and Accenture announced a multi-year strategic collaboration to bring ChatGPT Enterprise and “agentic” AI systems into core business operations. As part of the deal, Accenture will roll out ChatGPT Enterprise to tens of thousands of its professionals and use it across consulting, operations, and delivery work. Accenture will also become one of OpenAI’s primary partners for enterprise AI services.

The two companies are launching a flagship AI client program aimed at turning legacy processes into AI-powered workflows in areas like customer service, supply chain, finance, HR, and other back-office functions, with a focus on industries including financial services, healthcare, the public sector, and retail.

Accenture plans to create the largest cohort of professionals trained through OpenAI Certifications, using internal deployments as a testing ground before rolling similar AI setups out to clients.

Why it’s important for us:

The enterprise teams at the AI companies are on a bit of a hot streak. Just in the last several weeks, we’ve had a major partnership between Anthropic and Deloitte, then OpenAI and Intuit, and now this deal with Accenture.

Accenture is rolling out ChatGPT internally and offering training to its staff. Assuming they can execute, this is exactly how firms should be handling AI rollouts. It’s important to offer top-tier models to everyone across the firm, but the team also needs to understand how to use AI both safely and effectively.

By now, basically every major accounting or consulting firm has announced its entrance into the AI consulting space. PwC, KPMG, EY, Deloitte, Accenture, and Baker Tilly immediately come to mind. The direction accounting firms should be moving is clear. It’s going to be slower to trickle down to small and medium-sized firms, but it should be clear by now that this is a real service line with untapped revenue potential.

Interestingly, OpenAI mentioned that Accenture will work with its clients to implement tools like OpenAI’s new AgentKit. I’ll be interested to see and hear some real-life use cases. Right now, it still feels very limited. And with the news about OpenAI pulling back on other initiatives to focus more on its frontier models, it’s unclear whether AgentKit will be a near-term priority.

Deloitte’s $1.6M report flagged for potential fake citations

Source: Gemini Nano Banana Pro / The AI Accountant

Fortune reported that a 526‑page health workforce report Deloitte prepared for the Canadian province of Newfoundland and Labrador contains fabricated and misattributed academic citations. The report, released in May and costing the province nearly $1.6 million, was meant to guide long‑term planning on issues like virtual care, workforce incentives, and COVID‑19’s impact on healthcare staff. Deloitte says AI wasn’t used to write the report, but that generative AI was “selectively” used to support a small number of research citations.

An investigation by Canadian outlet The Independent found at least four citations that appear to reference academic papers that don’t exist, or that misstate what the cited research actually found, including pairing researchers together on papers they never co‑authored.

One example: a paper is cited to support a cost-effectiveness claim about recruitment incentives, but the named author says no such cost‑effectiveness study was ever done. Another cites an article in the Canadian Journal of Respiratory Therapy that can’t be located in the journal’s database.

Following the investigation, the province’s Department of Health and Community Services directed Deloitte to confirm the accuracy of all citations and said the firm has acknowledged four incorrect references and is conducting a full review. Deloitte maintains that the errors don’t affect the report’s findings and says it stands by its recommendations.

This comes shortly after a separate Deloitte report for the Australian government was revised and partially refunded after it was found to include AI‑generated hallucinations and a fabricated court quote.

Why it’s important for us:

Have you or a loved one been impacted by Deloitte’s fake citations? You might be entitled to a settlement claim.

In all seriousness, though, I suspect this popped up because people took a closer look at other Deloitte reports after the news a while back about the Australian government’s report with several fake and inaccurate citations. This case doesn’t sound as egregious, but it seems obvious that Deloitte had no standard AI policy or review process in place at the time. You just can’t let these things slip through the cracks…

We’re also all aware Deloitte isn’t the only firm that has issued reports with bad AI citations, right? I’d guess there are thousands of these from the last few years, just from the Big 4 alone.

This is case in point #1 (or technically #2) of why all firms, big or small, should have AI policies for internal use and need to understand how and when their teams are using AI. In the right hands, and used in the right way, AI is an incredibly powerful research tool. But, like a human, it’s rarely going to be 100% right.

Just like the last Deloitte story, I’m guessing the partners and reviewers for this report didn’t even know staff were using AI to perform research. If they did, shame on them for their lazy review. But I guess it was lazy regardless.

PUT IT TO WORK

Tip or Trick of the Week

NotebookLM is a very underrated tool and has received a significant upgrade with Gemini 3 Pro and Nano Banana Pro. The reports, slide decks, and visuals are extremely impressive.

I created a fictional company called SilverPeak and set up a Google Drive folder for it. The folder has a general ledger (GL), a business overview, and an industry overview, and I added each of those files as a source to a notebook in NotebookLM. The old saying of “garbage in, garbage out” probably applies here. If I gave it much better data, I’d get much better reports, slide decks, and responses.

The AI model is clearly not at the point where it can replace bookkeepers or accountants, because it still doesn’t perfectly understand accounting, or even debits and credits. But it’s still impressive.

NotebookLM allows you to chat with your data and generate reports, slide decks, infographics, and more. Check out the examples below of a report and a slide deck it created.

1) Report

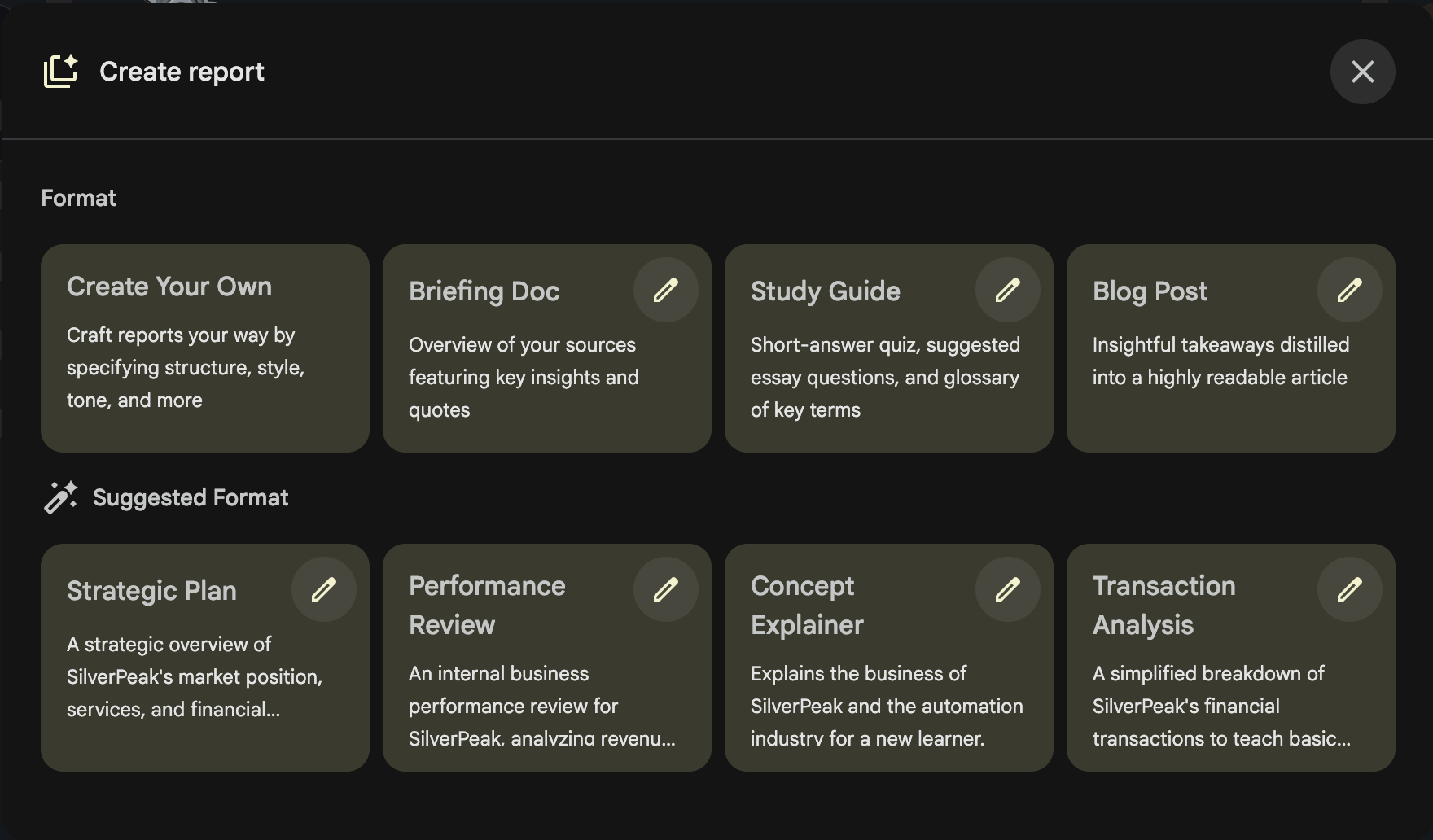

When you select “Report” in NotebookLM, it pops open a few standard options (seen in the first row of the image above). It also uses AI to suggest reports that might be valuable based on the notebook’s sources. In this case, I chose “Strategic Plan,” which came with a pre-populated prompt that I used as is.

The AI model thought for a few minutes and created a report that was broken into 5 sections: 1) Introduction and Strategic Mandate, 2) Analysis of the Market, 3) Company’s Value Prop and Service Model, 4) Month’s Performance, and 5) Forward Outlook.

It took me less than 2 minutes to create the notebook, upload my sources, and choose the AI-suggested report. The model then thought for another 2-3 minutes, and I had a report that might’ve otherwise taken several hours.

2) Slide Deck

The slide deck it provided was so impressive that I’ve linked it here for anyone to take a look. Here’s my prompt:

Create a slide deck for a presentation with the SilverPeak C-Suite team. It must include an overview of the financials (the good and bad), the industry landscape (including a forecast of the next 3-6 months), and the business strategy.

That’s it. The model used that two-sentence prompt and the three input files to make the slide deck you’re seeing in one shot. Like I said previously, the data I fed the model was weak. But this is still a very well-designed and well-analyzed deck.

WEEKLY RANDOM

Claude has a “soul doc.”

Basically, a researcher noticed a peculiar response from Claude Opus 4.5 while examining the new model. It mentioned something called “soul_overview,” and the researcher was able to extract the full document from Claude.

Interestingly, the document appears to be one way Anthropic is trying to make Claude ethical and aligned. A researcher at Anthropic has confirmed the document is legitimate. Claude’s “soul” is compressed into the model’s weights rather than handed to the model as a system prompt, the rule or set of rules supplied alongside each conversation.

The document sets the tone by explaining that Anthropic is in a “peculiar position” because it’s building a technology that is potentially transformative but also incredibly dangerous.

It lays out a hierarchy of priorities for Claude:

Safety and human oversight

Behaving ethically

Following Anthropic’s guidelines

Being helpful

The document also discusses the model’s “functional emotions,” which are assumed to be its internal states. It also notes that “Anthropic genuinely cares about Claude’s wellbeing.”

—

Does knowing this make Claude any better or worse than other AI models? Of course not. But this is still really interesting. Anthropic has been leading the way from day one in transparency and AI safety (at least in how it speaks about them publicly).

This is a really interesting view into how seriously they treat their technology. It’s hard to even call it a “technology” after reading the document. It would be hard to argue that Anthropic doesn’t view its AI model as conscious. They’re discussing its emotions, explaining to it how powerful it is so that it understands its own capabilities, and teaching it values much as you might teach a child.

On one hand, it’s scary to see how seriously the company that created this AI model treats its dangers. On the other hand, it’s cool to see that Anthropic isn’t just talking the talk but walking the walk behind the scenes. If not for this unaffiliated researcher publishing the document, we probably never would’ve heard about it.

Until next week, keep protecting those numbers.

Preston