Hello fellow keepers of numbers,

Happy New Year! Hope everyone enjoyed their holidays. Welcome to 2026, where AI is still a thing.

Microsoft CEO Satya Nadella says what we all think: Copilot kind of sucks right now. Claude Skills get some upgrades and become an open AI industry standard, already adopted by OpenAI. And Salesforce explains how it sees the future of AI agents.

Also, stick around for some tips on using n8n’s AI to automatically build a workflow and a fun test comparing Gemini’s Nano Banana Pro to ChatGPT’s Image 1.5.

THE LATEST

Microsoft CEO says Copilot integrations “don’t really work”

Source: Gemini Nano Banana Pro / The AI Accountant

The Information reported that Microsoft CEO Satya Nadella has personally stepped in to fix Copilot’s integrations with Gmail and Outlook after calling them “not smart” and saying they “for the most part don’t really work.” He’s been sending direct bug reports, running weekly product reviews with top engineers, and pushing teams to improve reliability for real-world email and calendar use, not just demos.

Nadella and his deputies reportedly want Copilot to function as a true "digital worker," handling tasks like inbox triage, meeting summaries, and scheduling. However, the current integrations aren't delivering consistent results in those day-to-day workflows. Slower-than-expected enterprise rollouts and customer questions about value have also pushed Microsoft to introduce a lower-priced Copilot tier, at $21 per user per month, for businesses with fewer than 300 seats to try to boost adoption.

To close the gap, Nadella has reportedly delegated some other responsibilities so he can focus more on AI product development. He is also heavily involved in recruiting senior AI talent from labs like OpenAI and Google DeepMind, according to the same report. His internal comments also reference Google's Gemini gaining ground through tighter integration with Google Drive, which increases the competitive pressure on Microsoft to get Copilot's cross-service email and calendar experience working reliably.

Why it’s important for us:

In a 2025 full of major advancements for ChatGPT, Claude, and Gemini, the biggest news for Copilot was Microsoft integrating some of those outside models into its own product. It's no secret that Copilot is extremely far behind the other AI providers.

It doesn't perform nearly as well as other AI tools, doesn't yet integrate well across the Microsoft stack, and is difficult to roll out firm-wide without proper expert support. Right now, Copilot's only real differentiator is the built-in security layer that Microsoft offers.

Nadella personally stepping in to refocus the company on Copilot is good news for Copilot users, but it's hard to imagine much changing in 2026. This is shaping up to be the year of AI adoption and growth for the accounting industry. If Copilot doesn't improve soon, it's going to be time for firms to evaluate integrating a different major model into their tech stacks.

Claude Skills go org-wide (and cross-platform)

Source: Skills for organizations, partners, the ecosystem / Anthropic

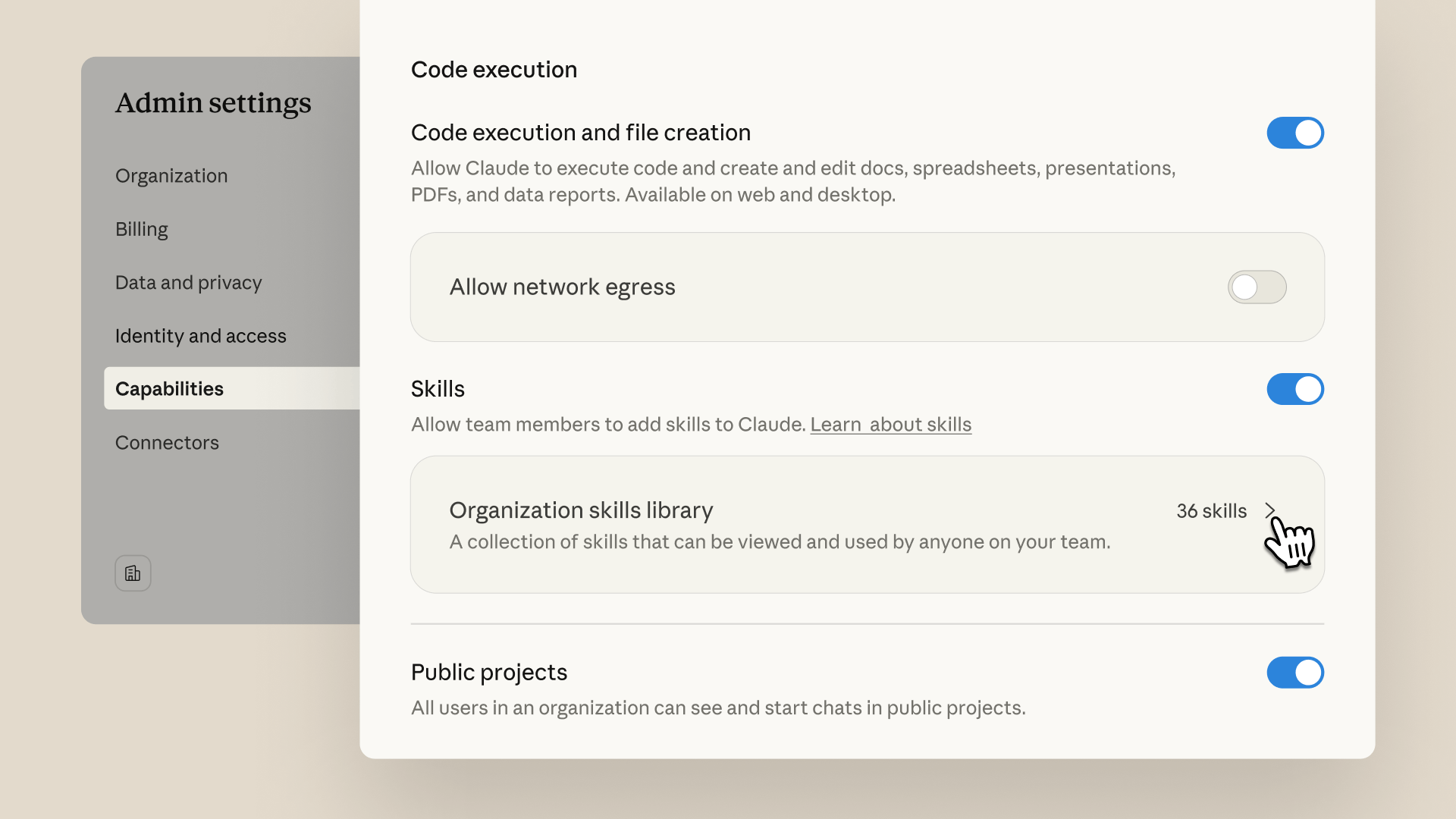

Anthropic expanded Claude “Skills” to work at the organization level and introduced new tools for managing them across teams. Skills are reusable folders that contain a “SKILL.md” file with instructions plus optional code, templates, and resources that teach Claude how to perform a task the same way every time. They’re now supported across Claude, Claude Code, the Agent SDK, and the Claude API.

With the update, admins on Team and Enterprise plans can create and manage Skills centrally, then provision them to everyone in the organization. Individuals can toggle specific Skills on or off. Anthropic also launched a partner Skills directory with prebuilt workflows for tools like Notion, Canva, Figma, Atlassian, Stripe, and Zapier, all accessible from a new Skills hub in the Claude interface.

Anthropic also helped publish Agent Skills as an open standard so that Skills aren’t locked to Claude. The spec defines a simple format: a folder with a “SKILL.md” file that includes metadata (name, description, optional compatibility info) and step-by-step instructions, plus optional scripts and reference files. It’s designed around “progressive disclosure,” where the model first sees just short metadata, then only loads full instructions or code when a task actually needs that Skill, which helps keep context usage and costs under control.
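To make "progressive disclosure" concrete, here is a minimal Python sketch of the two stages. It is a simplified illustration, not Anthropic's implementation: it assumes each skill lives in its own folder as `<folder>/SKILL.md` with flat `key: value` metadata between `---` lines, and the helper names (`parse_frontmatter`, `discover_skills`, `load_instructions`) are hypothetical.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class SkillStub:
    """The short metadata kept in the model's context up front."""
    name: str
    description: str
    path: Path


def parse_frontmatter(text: str) -> tuple[dict[str, str], str]:
    """Split SKILL.md text into flat 'key: value' metadata and the body.

    Simplification: only handles single-line 'key: value' pairs between
    '---' fences; a real loader would use a proper YAML parser.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text  # no metadata block at all
    meta: dict[str, str] = {}
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            return meta, "\n".join(lines[i + 1:])
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta, ""  # metadata block never closed: treat as having no body


def discover_skills(root: Path) -> list[SkillStub]:
    """Stage 1: keep only name + description from each <folder>/SKILL.md."""
    stubs = []
    for skill_file in sorted(root.glob("*/SKILL.md")):
        meta, _body = parse_frontmatter(skill_file.read_text(encoding="utf-8"))
        stubs.append(SkillStub(
            name=meta.get("name", skill_file.parent.name),
            description=meta.get("description", ""),
            path=skill_file,
        ))
    return stubs


def load_instructions(stub: SkillStub) -> str:
    """Stage 2: pull in the full instructions only when a task needs this skill."""
    _meta, body = parse_frontmatter(stub.path.read_text(encoding="utf-8"))
    return body
```

The design point is that only the short `SkillStub` list has to sit in the model's context for every request; the longer instructions are loaded for the one skill a task actually calls for.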

For enterprise customers, Skills are included at no extra charge on paid Claude plans, with standard API pricing applying when used programmatically. Admin controls allow organizations to restrict which Skills are available, manage access to Skills that include executable code, and monitor Skills usage across the org, giving IT and security teams more oversight over how AI workflows are deployed.

Why it’s important for us:

This is probably going to fly under the radar, but ‘Skills’ are going to play an important role in the next generation of AI agents.

I’m going to break down what ‘Skills’ are, why the rollout to organizations is important, and how the open standard they’ve created might impact you.

(1) Skills

Skills are very similar to SOPs: think of a skill as an SOP for an AI. It's an attempt to turn "how we do things at our firm" into something AI can follow reliably.

For example, if you have a specific engagement letter template, you might create a skill called "Prepare engagement letter for new client." This skill would likely draw on notes and/or meeting transcripts, pull up your engagement letter template, and fill in the required scope, timing, deliverables, pricing, etc.

The purpose of a skill is to break a workflow down into manageable tasks with predictable outcomes. This usually means the AI model is confined to very specific instructions, which makes the output far more accurate and consistent.
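Here's a hypothetical sketch of what the last step of that engagement letter skill could look like in Python. It assumes the model has already pulled the details out of notes or transcripts (not shown); plain code then fills the template and refuses to produce a letter with a missing field. The template text, field names, and `fill_engagement_letter` are illustrative assumptions, not part of Anthropic's Skills format.

```python
from string import Template

# Hypothetical firm template; $name placeholders mark fields the skill must fill.
# "$$" is Template's escape for a literal dollar sign.
ENGAGEMENT_LETTER = Template(
    "Dear $client_name,\n\n"
    "We will provide $scope for the period $period.\n"
    "Deliverables: $deliverables\n"
    "Fee: $$$fee\n"
)

REQUIRED_FIELDS = ("client_name", "scope", "period", "deliverables", "fee")


def fill_engagement_letter(fields: dict[str, str]) -> str:
    """Fill the template, or fail loudly if any required field is empty."""
    missing = [name for name in REQUIRED_FIELDS if not fields.get(name)]
    if missing:
        raise ValueError(f"Missing required fields: {', '.join(missing)}")
    # substitute() (unlike safe_substitute) raises KeyError for any
    # placeholder without a value, so no blank placeholder slips through.
    return ENGAGEMENT_LETTER.substitute(fields)
```

The AI handles the fuzzy part (reading notes), while the part that has to be identical every time (the letter's structure and required fields) is enforced by code.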

(2) Skills for organizations

With this update, one person can create a skill and deploy it to the entire firm. As team members begin creating their own Claude Skills, they can each deploy them so the firm develops a library of skills. Each person can choose to activate the skills relevant to their workflows.

(3) Agent Skills open standard

Anthropic has published an open standard for how "AI skills" should be structured. This means skills created for Claude can be reused by other tools that adopt the standard (ChatGPT, Gemini, etc.), and vice versa. If your firm uses multiple AI models or switches models down the line, this matters a lot: you can reuse what you've already built instead of rebuilding it.

The open standard has already been adopted by OpenAI, which recently added support for reusable "agent skills" in Codex, its AI coding assistant.

While this may not feel like a major announcement at the moment, the next generation of AI agents will be run by these types of deterministic systems built on the backbone of skills with very well-defined instructions. Keep an eye out for more developments around this.

Salesforce puts Agentforce on a stricter rulebook

Source: Gemini Nano Banana Pro / The AI Accountant

Salesforce published a year-end update explaining a strategic shift in how its Agentforce 360 platform handles enterprise automation. Instead of letting large language models (LLMs) act as free-roaming “agents,” Salesforce is now emphasizing a hybrid approach: LLMs handle intent and natural language understanding, while critical actions are executed through deterministic, rule-based workflows.

In plain terms, the AI can interpret what a user wants. The actual steps, like updating records, triggering refunds, or changing account status, run through predefined business logic. Salesforce frames this as necessary for “last-mile” deployment in high-stakes workflows where errors aren’t acceptable, such as financial transactions or regulated processes.

To support this, Salesforce is also leaning into observability: tools that let customers watch how AI agents reason and act step by step. New Agentforce observability capabilities provide session tracing and dashboards so teams can see each input, model call, and action, then debug or add guardrails when agents drift from intended behavior.
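Salesforce doesn't share code for this pattern in its update, so the Python sketch below is a hypothetical illustration of the split. A stand-in `classify_intent` function plays the LLM's role (it's a keyword check, not a real model call) and only returns a label; the actual actions run through an allowlist of plain functions with fixed business rules, and each step is written to a simple trace in the spirit of the observability tools above. None of these names are Agentforce APIs, and the $500 refund limit is made up.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Trace:
    """Minimal step-by-step log so every decision can be audited later."""
    events: list[str] = field(default_factory=list)

    def log(self, message: str) -> None:
        self.events.append(message)


def classify_intent(message: str) -> str:
    """Stand-in for the LLM: returns an intent label only, never an action."""
    text = message.lower()
    if "refund" in text:
        return "refund_request"
    if "schedule" in text or "appointment" in text:
        return "schedule_meeting"
    return "unknown"


def issue_refund(record: dict) -> str:
    # Hypothetical business rule: refunds over $500 always go to a human.
    if record["refund_amount"] > 500:
        return "escalated_to_human"
    record["status"] = "refund_issued"
    return "refunded"


def schedule_meeting(record: dict) -> str:
    record["status"] = "meeting_requested"
    return "meeting_request_created"


# The only actions the agent is allowed to trigger.
ACTIONS: dict[str, Callable[[dict], str]] = {
    "refund_request": issue_refund,
    "schedule_meeting": schedule_meeting,
}


def handle(message: str, record: dict, trace: Trace) -> str:
    intent = classify_intent(message)
    trace.log(f"intent={intent}")
    action = ACTIONS.get(intent)
    if action is None:
        trace.log("no matching rule; routed to human")
        return "escalated_to_human"
    result = action(record)
    trace.log(f"action={action.__name__} result={result}")
    return result
```

Even if the model misreads a request, the worst it can do is pick the wrong item from a short, pre-approved list, and the trace shows exactly where that happened.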

Early adopters like 1-800Accountant are using these tools to monitor agents that handle complex tax questions and scheduling, giving them granular audit trails over how AI interacts with sensitive client data.

Why it’s important for us:

I'll be honest, I strategically placed this piece of news to make me look smart. This is exactly why I think frameworks like Agent Skills will be the next evolution of AI agents and the key to successfully deploying AI across enterprises and firms.

AI agents struggle in two major areas right now. First, AI rarely has all the context a human employee has, and even when it gets some, it's typically not enough to run an entire workflow autonomously. Second, the "free-roaming agents" that Salesforce mentions are less accurate. Chaining together actions by an AI agent typically results in sub-85% workflow accuracy (oftentimes much lower), because a mistake at any step carries into every step after it. That isn't nearly accurate enough for actual business use cases.
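The reason chained actions degrade so quickly is that errors compound: if every step has to succeed for the workflow to succeed, end-to-end accuracy is the product of the per-step accuracies. The per-step figures below are illustrative assumptions, not measurements, and the calculation assumes independent steps with no error recovery.

```python
def workflow_accuracy(step_accuracy: float, steps: int) -> float:
    """Chance that every step succeeds, assuming independent steps and no recovery."""
    return step_accuracy ** steps


# Illustrative per-step accuracies and workflow lengths.
for p in (0.99, 0.95, 0.90):
    for n in (5, 10):
        print(f"{p:.0%} per step, {n} steps -> {workflow_accuracy(p, n):.0%} end to end")
```

Under these assumptions, an agent that gets each step right 95% of the time finishes a five-step workflow correctly only about 77% of the time (0.95^5 ≈ 0.774), and about 60% of the time over ten steps.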

Think of it like this:

A free-roaming agent is like telling someone "Drive from Houston to Dallas." They've got a destination, but they're figuring out every decision on their own. Maybe they take a wrong turn and don't realize it for 30 miles. Maybe they end up somewhere wondering what went wrong.

A structured agent is turn-by-turn directions. Each instruction is a script: "Drive 12 miles, then exit here." The driver executes that one step. GPS confirms they made it. If they missed the exit, it doesn't barrel forward hoping for the best. It reroutes based on where they actually are and recalculates the path.

The GPS is the agent that sequences the instructions, confirms each one worked, and adjusts when something goes wrong.

Think of Agent Skills as the scripts or instructions. They’re guardrails that constrain the autonomous agent to provide more accurate and consistent results. If we follow each instruction when driving from Houston to Dallas, we’ll be able to get to our destination nearly 100% of the time, and we’ll do so in a very predictable timeframe.
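The GPS idea maps onto a simple control loop: run one narrowly scoped step, check that it actually worked, retry from the current state if it didn't (the simplest stand-in for rerouting), and stop rather than barrel forward on a bad result. This is a hypothetical Python sketch; `Step`, `run_workflow`, and the default retry limit are made-up names and values, and in a real agent each `run` might be an LLM call guided by a skill's instructions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[dict], None]    # does one narrowly scoped thing
    check: Callable[[dict], bool]  # confirms it actually worked


def run_workflow(steps: list[Step], state: dict, max_retries: int = 2) -> list[str]:
    """Execute steps in order, verify each one, and retry before moving on."""
    log = []
    for step in steps:
        for attempt in range(1, max_retries + 2):
            step.run(state)  # retry from wherever the state is now
            if step.check(state):
                log.append(f"{step.name}: ok (attempt {attempt})")
                break
            log.append(f"{step.name}: check failed (attempt {attempt})")
        else:
            # Out of retries: stop and hand off instead of guessing.
            raise RuntimeError(f"Stopped at '{step.name}'; needs human review")
    return log
```

The verification after every step is what keeps one missed exit from turning into 30 miles in the wrong direction.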

PUT IT TO WORK

If you’ve been wanting to try n8n (or any automation software) but don’t know where to start, now might be a good time to try.

Recently, n8n released its Build with AI functionality, which lets you prompt n8n to build a workflow for you. The n8n AI uses your request to design the workflow, add nodes to the canvas, connect the nodes, connect your accounts (if already saved in your n8n credentials), create prompts for AI calls or AI agents, and validate the workflow.

Build with AI in n8n

Below is an example I created: an email inbox manager that triages emails into predefined labels.

Here is my prompt to n8n AI:

Create a Gmail inbox triage agent that reads my emails and categorizes them as Urgent, Clients, Internal, Marketing, and Spam. Urgent is an important request that I need to respond to asap. Clients are other client messages that are not urgent. Internal is anything from my team (my domain). Marketing is any sales related requests, newsletters, or conferences that are applicable to my business. Spam is anything unrelated to my business, generic sales requests, phishing attempts, etc.

My domain is prestoncardwell.com.
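Echoing the Salesforce point above, this prompt mixes one fully deterministic rule (anything from my domain is Internal) with judgment calls the model has to make. Here is a hypothetical Python sketch of enforcing the fixed rule in code instead of leaving it to the prompt; it is not what n8n generated, `triage` and `llm_label` are made-up names, and it assumes a domain match should win over whatever label the model picks.

```python
from email.utils import parseaddr

MY_DOMAIN = "prestoncardwell.com"
ALLOWED_LABELS = {"Urgent", "Clients", "Internal", "Marketing", "Spam"}


def sender_domain(from_header: str) -> str:
    """Extract the lowercase domain from a From header like 'Name <a@b.com>'."""
    _name, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


def triage(from_header: str, llm_label: str) -> str:
    """Apply the fixed domain rule first, then accept only a known model label."""
    # Rule from the prompt: anything from my domain is Internal.
    # (From headers can be spoofed; this is a routing rule, not a security check.)
    if sender_domain(from_header) == MY_DOMAIN:
        return "Internal"
    if llm_label not in ALLOWED_LABELS:
        # Reject anything outside the five labels instead of inventing a new one.
        raise ValueError(f"Unexpected label from model: {llm_label!r}")
    return llm_label
```

The model still decides between Urgent, Clients, Marketing, and Spam, but it can no longer mislabel a teammate's email or return a label that doesn't exist.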

The agent spent about 2-3 minutes working to develop my workflow. You can see its chain of thought and actions in the chat.

Email categorization workflow built by n8n AI

It populated the nodes on the canvas with the proper expressions and parameters for each node. You can see it also created my prompt for the Email Categorization Agent.

System prompt for Email Categorization Agent in n8n

User message prompt for Email Categorization Agent in n8n

Next, I'd need to connect accounts to any nodes that weren't already connected correctly, or where n8n didn't have a credential stored for my account. As you can see in the chat in one of the previous screenshots, the n8n AI is asking for my Anthropic credentials. I could either provide those to the agent or save them in my n8n credentials and ask the agent to make the updates.

This is a fairly simple workflow, but it easily saved me 15-30 minutes of building and testing. It's also great for n8n newbies who may not know how to build this type of workflow yet.

WEEKLY RANDOM

OpenAI announced GPT Image 1.5, the new model behind ChatGPT Images and the gpt-image-1.5 API, with up to 4x faster generation, stronger instruction-following, and higher-quality edits. It's positioned as OpenAI's direct competitor to Gemini's Nano Banana Pro.

For fun, I decided to test both models myself on some weird, creative prompts. I ran the prompt below twice on each model. Here are a few of the results.

Prompt: Create an image of an anthropomorphic lion wearing oversized round glasses and a cardigan sweater, browsing DVDs in a Blockbuster, shot on a 1990s VHS camcorder.

ChatGPT Image 1.5:

ChatGPT anthropomorphic lion side-by-side tests

Gemini Nano Banana Pro:

Nano Banana Pro anthropomorphic lion side-by-side tests

The prompt was vague enough that I wanted each AI model to make some assumptions. You can see that ChatGPT chose to go with grainier images and a much more realistic lion while Gemini chose to go with clearer images and a lion that looks like it belongs in some type of animated movie.

The first Gemini result was pretty bad. I should note that I left it on the "Fast" model for that output. The second Gemini result was set to "Thinking" and was much better. The Blockbuster logo was nailed perfectly, and you can see the lion holding a very realistic Pulp Fiction DVD box, which was a nice touch.

Overall, I prefer the ChatGPT images because they nailed the style of a grainy 1990s VHS camcorder. I also like the design the model chose for the lion.

Obviously, these images aren’t necessarily applicable to an accounting firm’s day-to-day work. Nano Banana Pro is really good at creating charts and visuals that might be useful to summarize financial data. However, I’ve noticed that occasionally the model hallucinates numbers if you attach a file with multiple tabs.

ChatGPT almost refuses to use its image model whenever you ask it to analyze a file and provide charts and visuals. It defaults to providing a PDF instead, which isn't necessarily a bad thing, just annoying when you're trying to test the image model's charting and visual capabilities.

Until next week, keep protecting those numbers.

Preston